Welcome to the blog for my new research project! Except that it’s not really new: like many research projects, this one pulls in some threads I’ve been working on for some time, often at the edges of my other projects. I don’t plan to recycle content here, but for my inaugural post it seems appropriate to return to something I posted back in 2016 as an invited response to the Modern Language Association’s Committee on Scholarly Editions’ recent white paper, “Considering the Scholarly Edition in the Digital Age.” The article-length post below originated as remarks for a roundtable at the 2016 Society for Textual Scholarship conference in Ottawa, and was published in expanded form on the CSE’s blog. (My thanks to the conference organizers and the CSE for the invitation to contribute.) Readers looking for a short version are invited to skip down to the numbered items in bold—in fact, point number 5 happens to be the premise of my Veil of Code project, about which I’ll be posting semi-regularly (and more concisely) here. You could call this a road map for how I arrived from my earlier work in Shakespeare and digital editing to this new project.

*

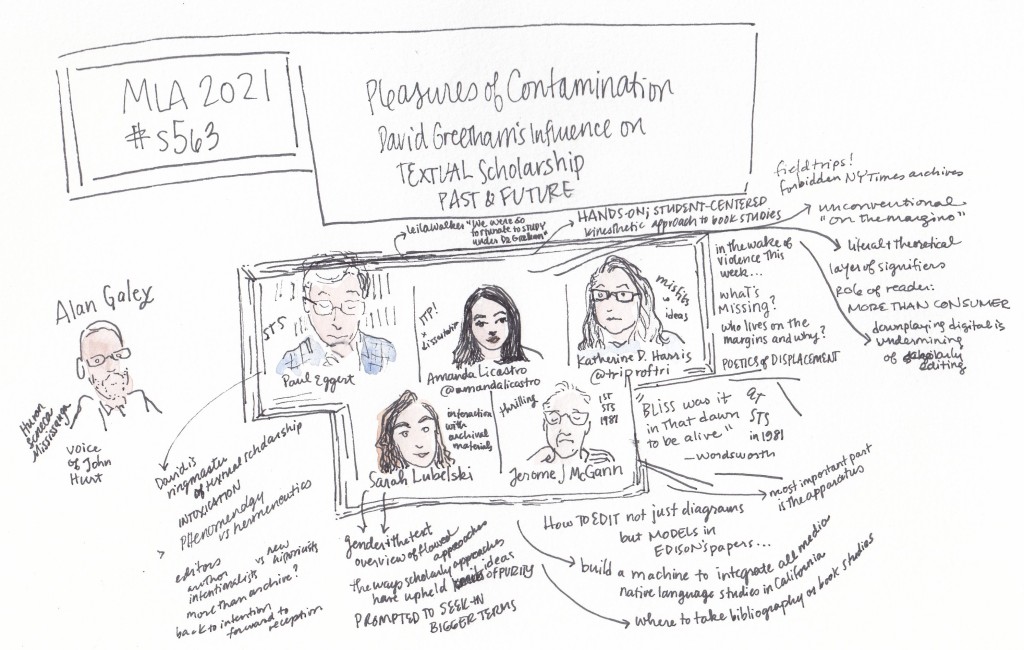

When I first read the CSE’s white paper “Considering the Scholarly Edition in the Digital Age” I couldn’t help but think back to David Greetham’s pointed review of an earlier statement on digital scholarly editing sponsored by an earlier incarnation of the CSE. The collection Electronic Textual Editing was published by the MLA in 2006, with open-access preview versions of its contents available thanks to the Text Encoding Initiative. Prefacing its commissioned original articles are two largely uncontextualized statements issued by the CSE in the past: “Guidelines for Editors of Scholarly Editions” and “Guiding Questions for Vettors of Print and Electronic Editions”. Greetham’s review praises the strengths of individual articles in this collection — I continue to reference and assign some of them myself — but also skewers the confused logic and tone of the collection as a whole, calling it an “ex cathedra statement from the policing organization of our discipline(s)” (133). Greetham’s vigilance against the subtle creep of institutionalized orthodoxy was not out of place, then or now. Yet the CSE’s most recent white paper is refreshingly free of any doctrinal tone. Instead, as our co-panelist John Bryant says in his post, it’s “a delightfully diplomatic document.” Its framing as a white paper, not guidelines or best practices, marks a clear invitation to discussion and debate, with all topics on the table.

Discussion and debate — the informed and intelligent kind — is exactly what we need as digital humanities increasingly becomes the context in which textual work like scholarly editing takes place. My aim in this post is to consider ways to raise the level of that debate. Before unpacking five specific ways we can improve the conversation, I’d like to compare and contrast how that conversation has unfolded in the CSE white paper and in another, far more contentious document.

In the weeks since our roundtable took place at the Society for Textual Scholarship (STS) conference in Ottawa, I’ve been thinking about the CSE white paper in context with another recent public invitation to debate: a controversial Los Angeles Review of Books piece called “Neoliberal Tools (and Archives): a Political History of Digital Humanities,” by Daniel Allington, Sarah Brouillette, and David Golumbia. No doubt the LARB article would have animated our discussions in Ottawa had it appeared slightly earlier — though whether it would have raised the level of discussion is another question, to which I’ll return in a moment. Notably, both documents converge quickly on the same specific point. The LARB authors express it in their opening, when they associate digital humanities with a “discourse [that] sees technological innovation as an end in itself.” The CSE white paper, published just a few months earlier, points to a similar concern when it cautions that “the digital is neither inherently a site of innovation nor a necessarily useful innovation in itself.” Good advice, especially when it comes from textual scholars who study the longue durée of textual transmission and its technologies.

One of the crucial differences between the two pieces is that the LARB article takes “Digital Humanities,” as such (with capital letters), as its object of analysis. That, I believe, is a mistake for anyone wishing to raise the level of discussion. After more than 15 years of doing research that could be categorized as digital humanities (and using the label sometimes myself as a tactical term) it’s not the term or the work that I find increasingly unsatisfying; it’s the baggy logic of the category itself. To be clear, I could never imagine walking away from many kinds of work that people call digital humanities, as the LARB authors seem to advise, and as Alan Liu promptly critiqued them for doing. Rather, it’s that I’m more interested in exploring how my own digital scholarship refracts into more specific topics, like born-digital bibliography and humanities-oriented visualization and the pedagogy of text encoding and the labour of digital scholarly editing and reading media history through Shakespeare. Scholarly editing, the focus of the CSE white paper, is likewise specific enough to support an intelligent conversation.

So while I’m sympathetic to what Allington, Brouillette, and Golumbia were trying to accomplish in their critique of neoliberal labour practices and the digital humanities — in which scholarly editing is most certainly implicated — I’m also disappointed that the article that resulted fails to raise the level of debate about textual labour in the academy. As other commentators have pointed out, the LARB piece was published with several structural weaknesses: its history is patchy and selective, allowing parts of the digital humanities to stand in for some imagined whole; it underplays the politically and socially progressive work done by many archivists and scholarly editors; it’s awkwardly fixated on what seem (to me, at this distance) to be local and personal disputes originating at the University of Virginia; and — this is where it really lost me — it lumps one of the best textual scholars and critics of digital instrumentalism and neo-positivism, Johanna Drucker, in with the very things she critiques so well (here, for example, and especially in her first two chapters here). (See also Juliana Spahr, Richard So, and Andrew Piper’s insightful and measured response in the LARB.)

One lesson we might take from this comparison of the CSE and LARB documents, then, is that textual scholarship, as a field which studies the intersections of labour, culture, and technology, would be a good starting place to mount an intelligent critique of… well, maybe not “Digital Humanities” but a more constructive and specific framing of the structural problems that the LARB authors have in their sights. For example, Amy Earhart and our co-panelist Peter Robinson have recently critiqued certain misconceptions about textual scholarship that are prevalent among many digital humanists. The political implications of their critiques, which are solidly grounded in the history of scholarly editing, should be of interest to those who looked for a more substantial analysis in the LARB piece.

Indeed, the discussion to which the CSE white paper is contributing would be improved by a frank and evidence-based critique of specific, identifiable tendencies in the digital humanities, just as the STS community questioned the orthodoxies of the New Bibliography many years ago. (I’d like to think there’s some shared intellectual DNA in the current #transformDH community, which the LARB article puzzlingly neglects to mention.) But we need to do the homework for that critique to be substantive and translatable into action. That homework includes looking beyond the United States to understand what’s being called digital humanities, looking beyond the post-2009 blogosphere/twitterverse for the writing that matters, and looking beyond English departments. Scholarly editing, after all, is not entirely about literature, just as textual scholarship is not entirely about scholarly editing.

On that note, the LARB piece does usefully raise the question of the “us” who’s having the conversation about textual scholarship and labour in the twenty-first century. Like Johanna Drucker, I’m a humanities scholar based in an Information school. Does that mean I have an “idiosyncractic relationship to the humanities,” as the LARB authors say Drucker does? (I certainly hope so: all my favorite colleagues are idiosyncratic…) And like Matthew Kirschenbaum in his response to the LARB article, I find myself less concerned whether I’m a digital humanist by someone else’s definition, and reflecting more upon the not-entirely-categorizable experience of doing digital textual work — the kind that the CSE white paper is concerned with. For better or worse, my own experience means that I don’t view scholarly editing, bibliography, book history, or any of the CSE white paper’s topics with a literature department as my primary frame of reference. Yet one might argue that textual scholarship itself has an idiosyncratic relationship to the humanities. It can certainly be a welcoming field for those who do.

So, based on my own idiosyncratic experiences of doing digital textual scholarship in and out of English departments over the past 15 years or so, here are five suggestions for ways to raise the level of discussion, or at least take it somewhere new:

1. Don’t get hung up on the question “what is a (digital) scholarly edition?”

The CSE white paper spends a lot of time on this, motivated by a well-intentioned desire to open rather than settle the question. But as my book history students once told me in one of my first classes at the University of Toronto — after I’d glibly thrown “what is a text?” down on the seminar table a few times too many — we can play the definitional game all day and get nowhere. It’s more useful to shift the question into the gerundive: not “what is a scholarly edition” but “what is scholarly editing?”; and by extension, not “what is an archive?” but “what is archiving?” The gerund makes it an activity, which humanizes the question by making it about agents.

This point is motivated by my own experience in seeing the history of technology differently when I stopped focusing so much on computers, and more on computing. The history of textual technology becomes a lot richer, more plural, more critically provocative, and simply more interesting when computing as a range of socialized practices isn’t fettered to a single essentialized device. (Hence another of my complaints about most definitions of digital humanities: they construe the meaning of digital far too narrowly, even as they attempt to broaden the humanities.)

What, then, can we learn by focusing less on editions and more on agents who carry out the work of editing and archiving? Who are the overlooked editorial and archival agents of the digital age? Is it time to question the perceived status of the scholarly edition as the telos of textual scholarship generally? What do we gain and lose by conceiving of textual scholarship in the form of large digital editing projects, whose shape and aims are so often determined by the needs of funding, infrastructure, and project management? If one is a textual scholar who wants to do new work in digital form, is a large digital scholarly editing project going to be the only game in town?

For me, the answer to this final question is not necessarily, but my work is increasingly focused on all of these questions — and without the distraction of worrying about what a digital scholarly edition is or isn’t.

2. Use the word archive carefully, and acknowledge the scholarship of archivists.

The CSE white paper bases much of its argument on what it identifies as the trend “towards the creation of an edition as a single perspective on a much larger-scale text archive.” That distinction needs more thought. Archives are fascinating places, which I’ve learned from researching in them and helping to train future archivists at the University of Toronto’s iSchool. But those who do archival work know that we both gain and lose by embracing the word archive too readily and applying it too broadly. To take an analogy from another field, imagine what any museum curator or museum studies scholar must think whenever they hear a lunch buffet described as “well-curated.” The object of my criticism here isn’t theorists like Derrida, Foucault, or Agamben, who have helped to introduce the archive as a multivalent term in poststructuralism, nor is it the building of the resources that John Bryant calls “digital critical archives” in his post on the CSE blog, which are developing into valuable new forms of textual scholarship. Indeed, the meaning of the word archive has long been a moving target. For what it’s worth, I wrote the first two chapters of my book The Shakespearean Archive as an attempt to bridge the archival, editorial, and poststructuralist understandings of the term, and with no desire to police its meaning.

Rather, the problem is the widespread tendency to invoke the term archive indiscriminately, sometimes without much acknowledgement of its history or of the particulars of archiving and archival research. Whenever we yoke the terms edition and archive together, whether in opposition or on a continuum, we need to remember the conversation between disciplines that should be happening as well. But with the increased currency of the term digital archive in the digital humanities, it’s become all too easy to neglect the fact that archivists, like editors, have a rich and active scholarly literature of their own in journals like Archival Science, Archivaria, and American Archivist, where they sometimes even engage topics in our field like scholarly editing. (See Paul Eggert’s and Kate Theimer’s critiques of similar tendencies from the editorial and archival sides, respectively.)

Failure to engage the scholarship of actual archivists, whether among digital archive-builders or their critics (like the LARB authors), can lead both groups to underestimate the political stakes of the work that archives, and archivists, perform. Like Kirschenbaum, who makes a similar point in his response, the archivists that I work with don’t need to be told that archives are a critical construct in which power is exercised, where ideology quietly shapes the work of memory. Some in their field have already written the book on the subject. The CSE white paper at least avoids active misrepresentation of what archivists do, but the white paper and LARB article alike perpetuate a long-running failure to engage archiving — and archivists — beyond metaphor.

The loss is ours. If more literary scholars, editors, historians, and digital humanists actually read and referenced the scholarly literature of archivists, we’d find a wealth of thinking on concepts like provenance, original order, respect des fonds, the nature of records, authenticity, and the materiality of cultural production. (Here’s a good place to start.) What we would learn from these archival concepts, and from the intellectual traditions behind them, is that archives are edited. That’s a crucial point of context for reading the CSE white paper, which presents an edition as something decanted from an archive, and implies that the completeness of the archive is what allows editions to be selective, critically partial, and intellectually risk-taking. Yet actual archives are equally defined by their incompleteness: missing records, embargoed correspondence, conjectural orderings and groupings of records (which may or may not reflect the actual practice of those who produced the records), and the limitations and strengths of their finding aids (which are created by archivists who are just as human as scholarly editors).

In short, if we’re thinking of an archive, digital or otherwise, as a structure that exists prior to editing and other critical interventions into the documentary record, and which represents completeness rather than selectivity, and which doesn’t have interpretation built into its very bones, then we’re failing to learn something fundamental from archivists themselves. We might still refer to our digital projects as archives — no field or profession owns the term — but it would be a measure of progress if we treated the term as one that our digital projects have to earn.

3. Don’t let data become the default term for all digital materials.

As with the indiscriminate use of archive, the same tendency with the word data is one I find especially counterproductive, such that I wrote the final chapter of Shakespearean Archive (“Data and the Ghosts of Materiality”) largely as an argument against the fetishization of the word by some (but not all) digital humanists. The problem is simply this: when the CSE white paper says that “a critical edition … draws its data from [an] archive,” or when, say, a text of Hamlet is referred to as data to be mined, the word data becomes an ontological steamroller that flattens out our discourse. Textual scholars have spent decades, even centuries, developing nuanced language for the materials that we study. Just think of the different resonances of the word text, let alone edition, book, version, witness, record, variant, fragment, imprint, issue, paratext, inscription, or incunable, to name a few. There’s a wealth of nouns, plural and singular, that textual scholars use to name the world of our materials, and to gain some semantic purchase on that world.

And yes, data is another of our plural nouns: bibliographers and book historians were doing quantitative humanistic scholarship decades before it was fashionable in the post-2009 digital humanities, and today one can’t pursue certain questions in these fields without measuring the widths between chain lines, or determining the price of paper, or calculating the financial risks of publishing playbooks, or compiling databases of sales figures, book prices, and reprinting rates. These are the forms of data that my field has been using for decades, along with other kinds of evidence, including texts — all of which require interpretation, and never speak for themselves. Data as a word is hardly something new in my own parts of humanities, contrary to claims made by some DH proponents and critics alike that the concept of data is inherently strange and threatening to humanists.

When we use the word indiscriminately, when any digitally transacted material becomes data in our language and thinking, we face three problems: 1) it becomes easy to forget what any social scientist worth her salt would tell us, which is that data are generated, and they can be generated well or poorly, but they are never just neutral stuff waiting in the ground to be mined; 2) it becomes too easy to avoid the more technically precise terms I’ve mentioned that keep us honest and reflect the ontological diversity of our materials (see above); and 3) we replace those granular terms with a word that, after the mid-twentieth century, cannot be detached from its connotations of positivist and instrumentalist ways of organizing knowledge and labour. On this last point, one imagines the LARB authors would rightly point to data’s use — or, more accurately, co-option — in neoliberal management philosophy.

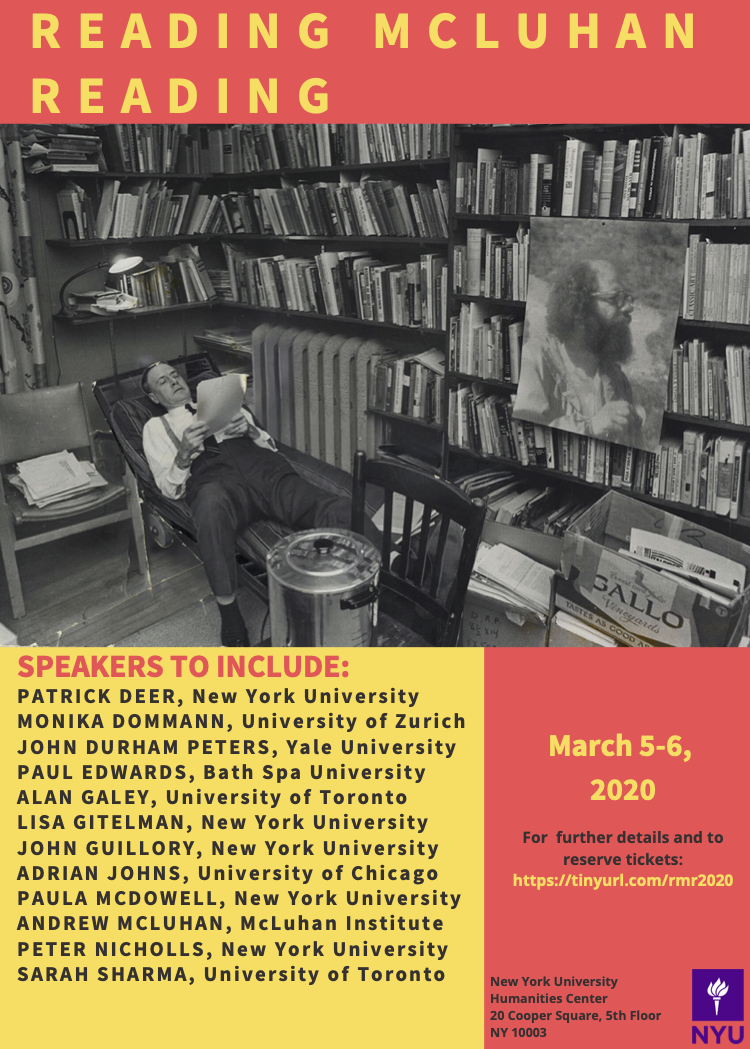

The history of this problem is too big to elaborate here, but in short, the reasons for data’s unhelpful ubiquity are rooted partly in the history of programming (i.e. the innocently named “data files” that were too big to hold in active RAM), and partly in (yes) the early 21st-century neoliberal university and the language of managerialism. See the articles in Lisa Gitelman’s collection Raw Data Is an Oxymoron for more historical context and substantial questioning of the term, and Christine L. Borgman’s excellent Big Data, Little Data, No Data for a detailed survey of the term’s deeply contextual meanings in various disciplines today.

It’s bad enough when university administrators and academic policy-makers latch onto a term like data and let it do their thinking for them (remember excellence?), but textual scholars — having debated years ago whether bibliography is a science — should understand better than most humanists what’s at stake in letting other fields like computer science define our ontologies for us, even quietly through the casual borrowing of language. As with the word archive, when we say data we should mean it.

4. Don’t be too quick to generalize about “the digital age.”

Like print culture and, for that matter, digital humanities, the “digital age” of the white paper’s title has become too easy an object for generalization. Though I use the term myself, I try to avoid it for the same reason that I avoid using “the digital” as a strategically vague adjectival noun (as in “the humanities need to embrace the digital”; digital what, exactly?). One problem with the premise of the CSE white paper is that it begs the question; that is, from its title on down it takes digital technology to be the dominant characterizing feature of scholarly editing in the present. What if it had instead begun with the more open-ended topic of “the scholarly edition in the present”? We would certainly need to discuss digital technology as an agent of change, but it’s not the only one. In 2016, editing and archiving alike are also happening in an age of truth and reconciliation commissions and new understanding of aboriginal and minority literatures, of editing as a cultural practice, of emerging forms of academic and textual labour, of changing models for review and publication, and of new ways of thinking about canons, canonicity, and authorship, to name a few other currents that shape our field in the present. I can understand the need to limit the focus to do justice to a given topic, but when accounting for the changing contexts of scholarly editing in the present, we need to cast the net wider than the CSE white paper does (keeping in mind that it’s just a white paper).

And lest any reader grumpily demand at this point “how does any of this help me identify press variants? just give me my collation software! and stay off my lawn!!” it’s worth pausing to reflect on what editing, bibliography, and other kinds of textual scholarship are for; whose texts we edit, and why (or why not); and what relationship between past and future, mediated by the transmission of texts, we are helping to shape in our research and teaching. These questions may reasonably lie beyond the scope of the CSE white paper, but neither should we let “the digital age” limit our focus to digital tools when we think about editing in the twenty-first century.

And, finally, on that note…

5. Scholarly editing in the present doesn’t just mean using digital tools, and publishing via digital networks, but also editing born-digital texts.

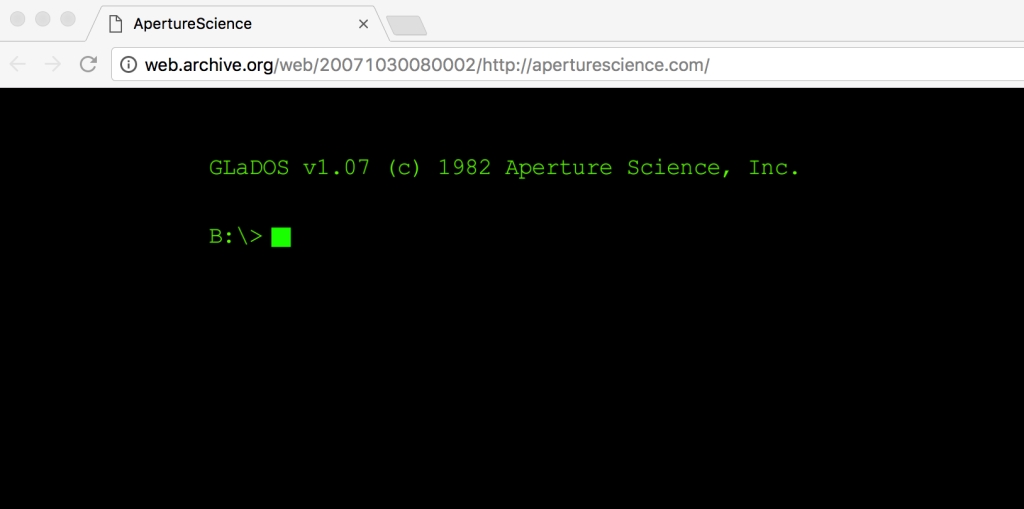

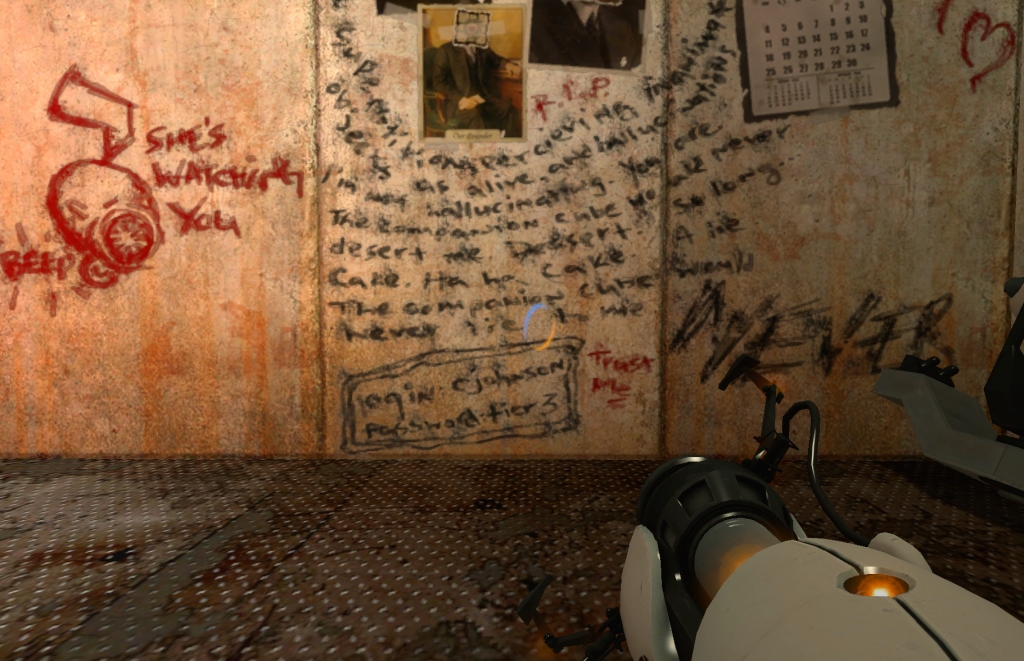

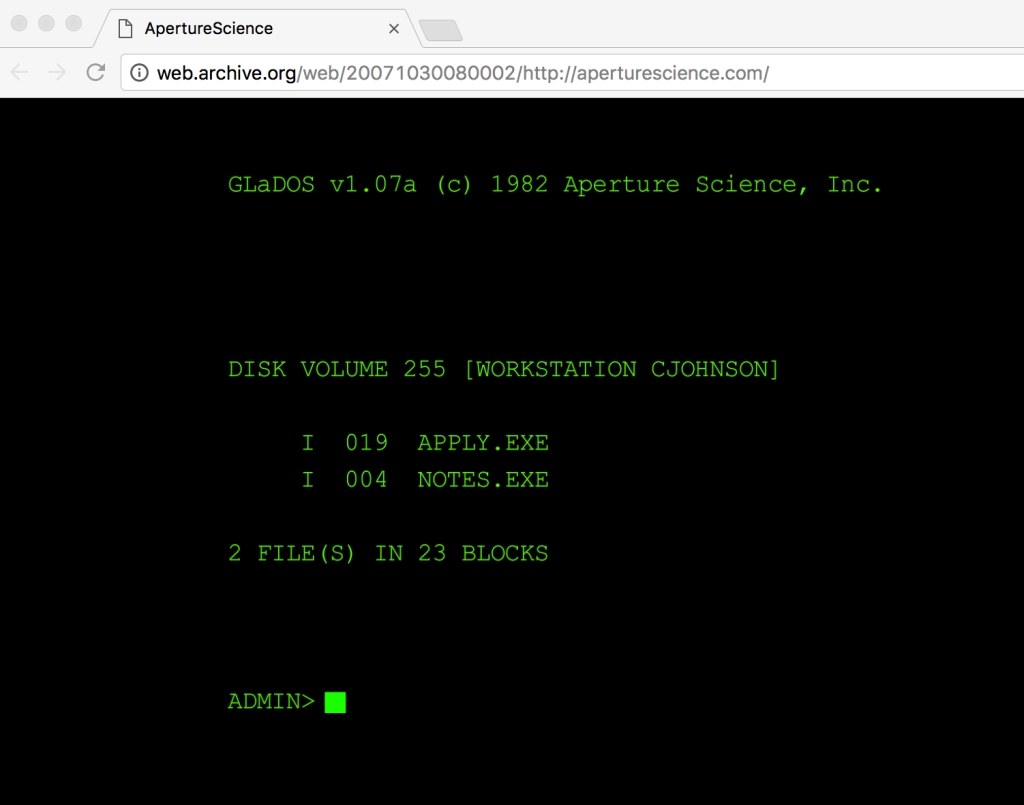

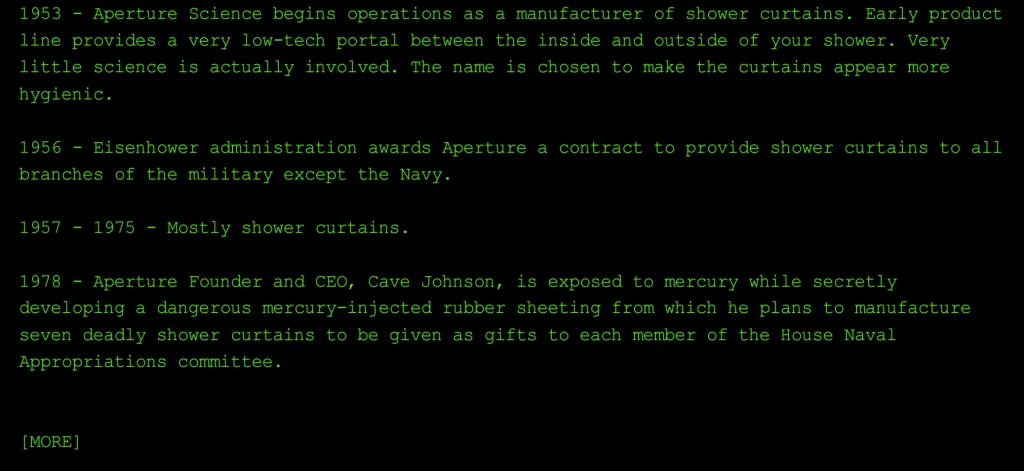

This seems to be a genuine blind spot in the CSE white paper. It makes no direct mention of how editors should face the textual condition (or .txtual condition, as Kirschenbaum calls it) of the late-20th and 21st centuries, which will be overwhelmingly digital in terms of how authors and other agents involved in textual production make the texts we edit and study. Any scholarly editor working on an author from the 1980s to the present may well be dealing with word processor files (and disc images, and actual discs), and anyone interested in the scholarly editing of electronic literature or video games or e-books or digital records of musical theatre or digital (re)publication of public-domain print texts will obviously be working with very new kinds of materials for textual scholarship. The closest we get to this question in the CSE white paper is a passing reference to N. Katherine Hayles and Jessica Pressman’s useful concept of comparative textual media. But overall the white paper — which, remember, is titled “Considering the Scholarly Edition in the Digital Age” — contains barely a hint that we might need to edit materials from the digital age.

I don’t attribute the white paper’s silence on this topic to conservatism. That would be out of step with the CSE’s position overall, and John Young clearly flags the importance of born-digital artifacts in his earlier CSE blog post. But the silence in the white paper is puzzling. Perhaps it says something about the dominant trends in scholarly editing and digital humanities alike. I can’t help but think again of Greetham’s critique of the Electronic Textual Editing collection, especially his point that “the absence of painting, dance, film, television, video games, music (about which there has been some very challenging critical discussion of late [i.e. 2007]) makes this collection almost relentlessly text- (or linguistics-) based” (135). I’m also reminded of Andrew Prescott’s argument that digital humanities needs to concern itself less with tool-building and tool-use, and more with the study of born-digital artifacts themselves, along the lines D.F. McKenzie advocated. Other fields and organizations such as STS and SHARP (the Society for the History of Authorship, Reading, and Publishing) have been genuinely welcoming to digital textual scholarship. Likewise, David Greetham’s indispensable textbook, Textual Scholarship: an Introduction (which was cited in the white paper), and the recent Cambridge Companion to Textual Scholarship (which wasn’t) both deal head-on with the challenge of the born-digital — the latter in a chapter by Kirschenbaum and Doug Reside with that very phrase as its subtitle. It’s also a promising sign that Kirschenbaum’s new book, Track Changes: a Literary History of Word Processing, will no doubt be at the top of many textual scholars’ reading lists this summer [i.e. 2016].

A study like Kirschenbaum’s could not have been written without digital tools, but perhaps it’s time to question the primacy of the digital tool in DH curricula, funding models, and in our thinking generally. A deliberate focus on digital materials, not just digital tools and editions, would be consistent with the CSE’s evident desire to broaden the way we think about scholarly editing. It would also be consistent with the MLA’s history of championing a broad-minded conception of the materials that presently need to be understood and preserved for future scholarship. (See the 1995 report on the “Significance of Primary Records,” written by an MLA committee chaired by G. Thomas Tanselle.) What we now call digital curation and preservation were only beginning to gain steam in the mainstream scholarly imagination when that report was written (see Jeff Rothenberg’s “Ensuring the Longevity of Digital Information,” first published in Scientific American; note the Shakespeare example!). Today digital curation offers a new way for textual scholars to serve the public good by helping to preserve, understand, and critically interrogate the materials that comprise our digital cultural heritage. In doing so, I suspect textual scholars may find their own answer to Alan Liu’s pointed query, “Where Is the Cultural Criticism in Digital Humanities?”. For me, this mission, far more than passing enthusiasm to hook scholarly editing into big data or social media, is where I find reason to feel excited, motivated, and not a little humbled and challenged by what lies ahead for textual studies.

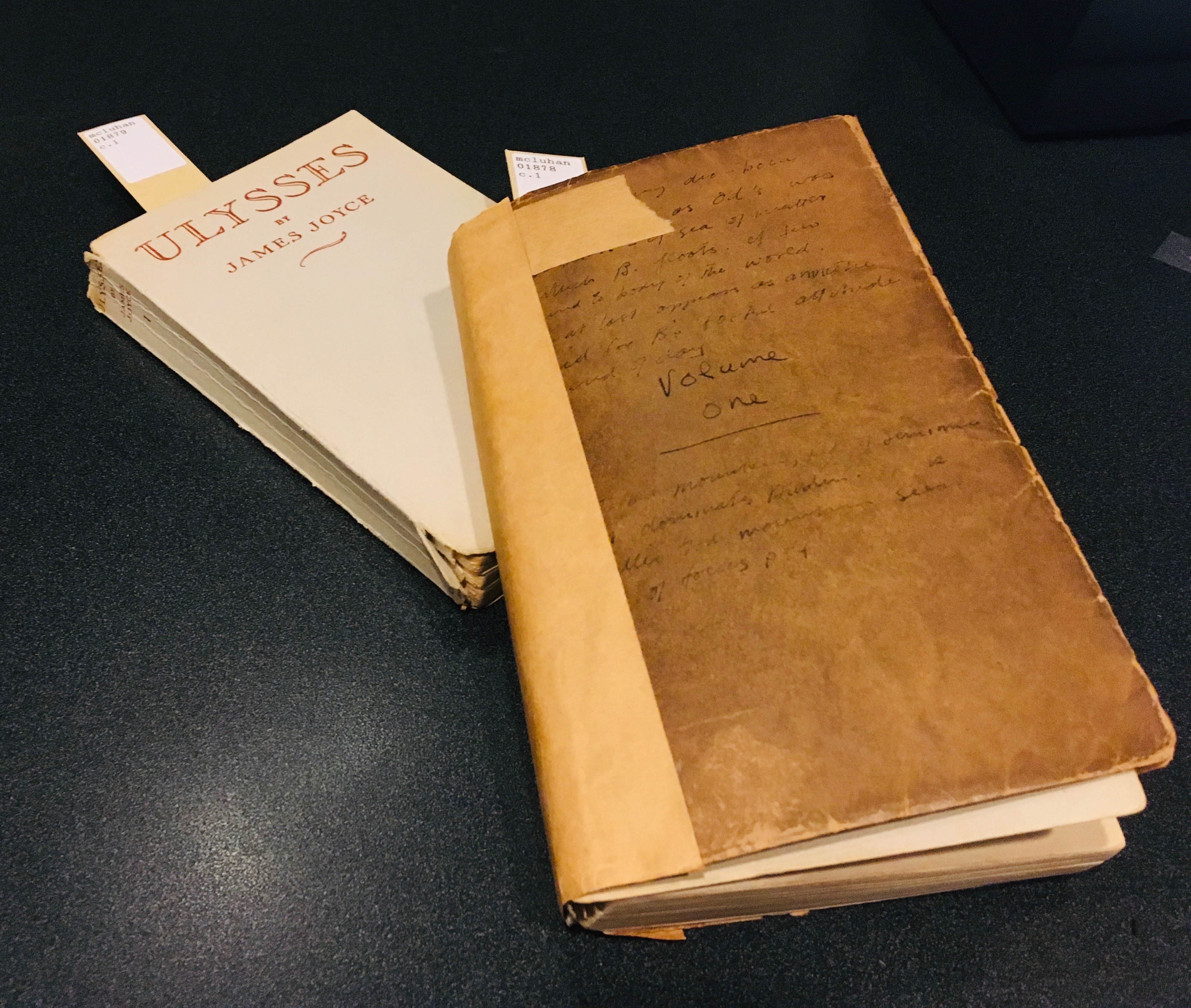

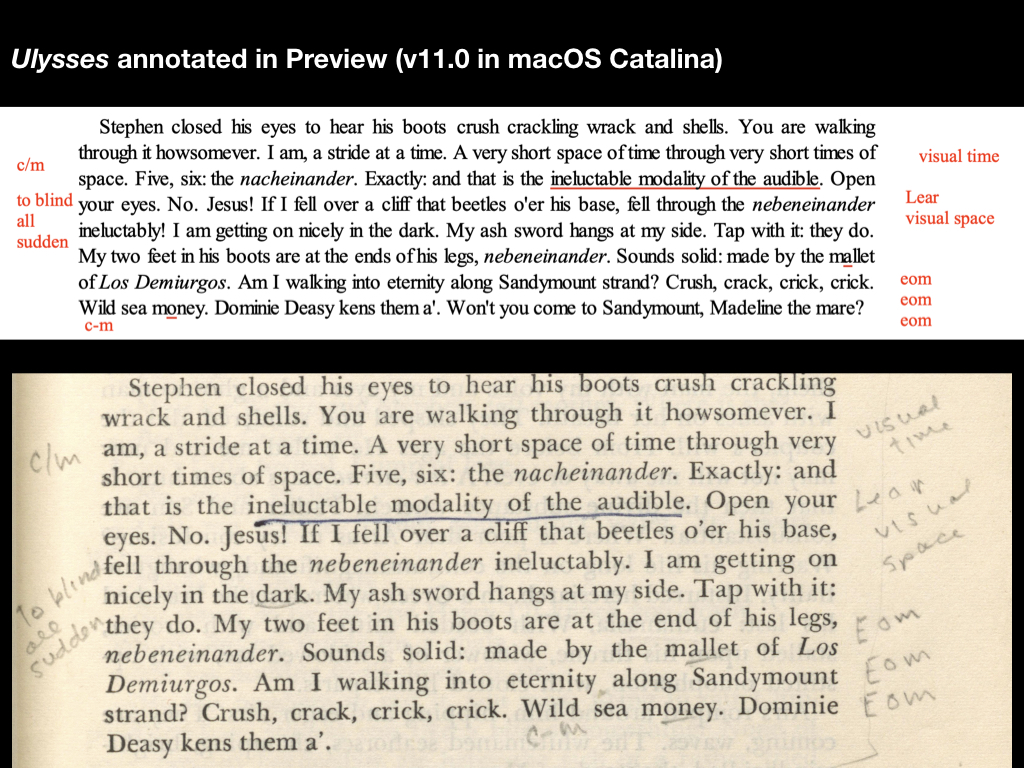

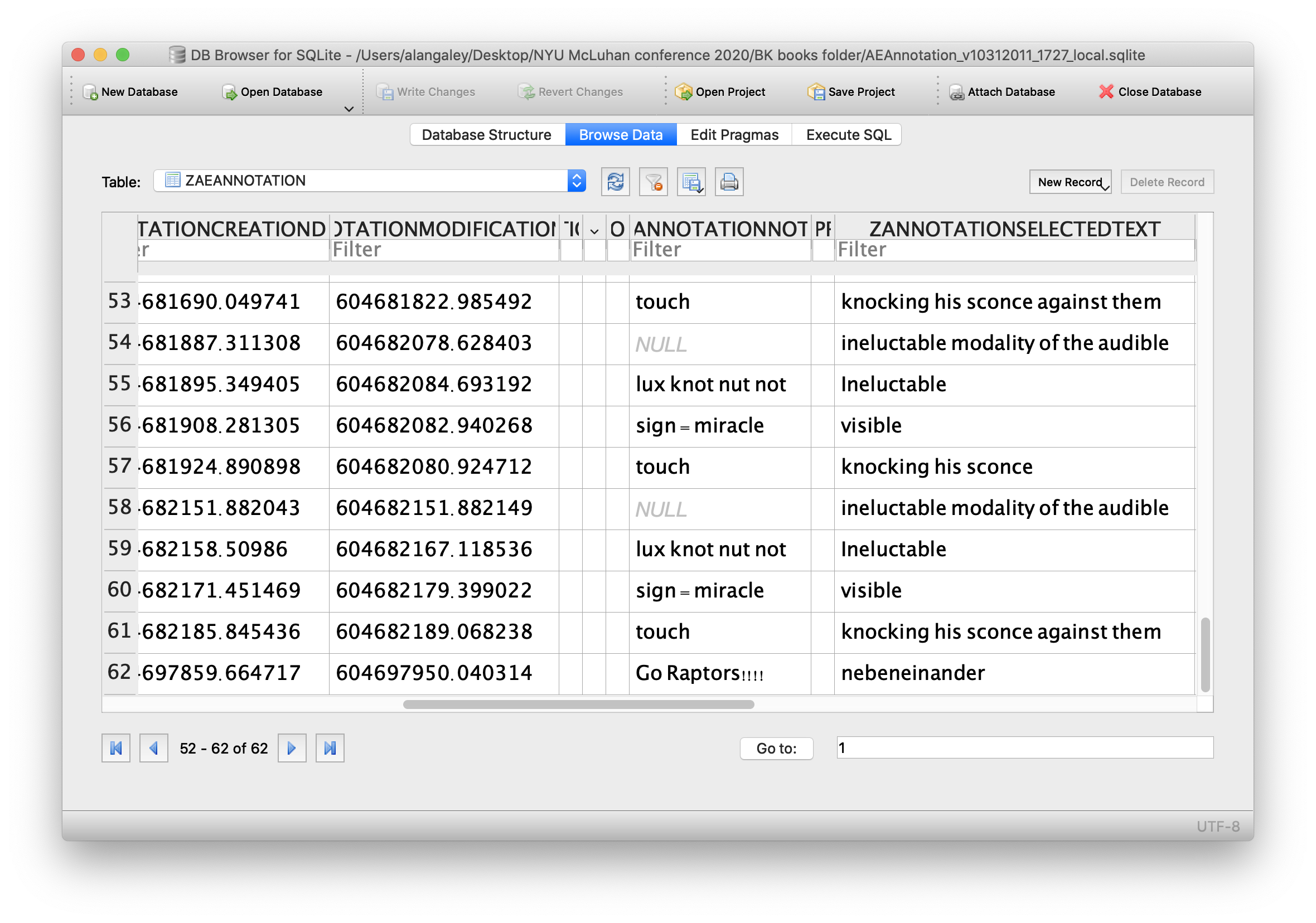

It’s good motivation to stop writing excessively long blog posts and get back to writing my own next book, tentatively titled The Veil of Code: Studies in Born-Digital Bibliography, and to look forward to teaching courses this coming year that deal with the future of the book and born-digital applications of analytical and historical bibliography. At some point in those classes, we inevitably re-enact one of textual scholarship’s primal scenes: a group of students in a rare book library, gathered around a book or other textual artifact on a table, together using the evidence of our senses to examine the paper stock, readers’ marks, scribal construction of mise-en-page, dog-eared leaves, the bite of letterpress into paper (sometimes vellum), or changing representations of authorship in successive editions. We imagine what questions we might ask of the object, and what questions it asks of us. We consider the relationship between evidence and interpretation. We train our eyes to see how form effects meaning. If we can’t read the text, we slow down and puzzle it out if we can. Some students take pictures on their smartphones, others simply reach out to touch a parchment leaf that still transmits information centuries after a scribe prepared it to receive writing (which is why we only touch the uninked parts of the leaves). And, throughout, there is always a dialectic between the textual artifact on the table and the bustling twenty-first century city street visible just outside the window.

With that imagined scene as context, I’ll end with this small thought experiment: consider what difference it makes to this scene if digital technologies are admitted into it exclusively, or even primarily, as tools, or as data sets, and never as artifacts on the table themselves. What difference does it make for those students, and for the social world outside the library that they rejoin when the class ends? If we are seriously to consider the scholarly edition in the digital age, or the politics of digital textual labour in the neoliberal academy — or even both topics together — then we must consider these questions, too.

Alan Galey

Faculty of Information

University of Toronto